Evaluating the Accuracy of AI-Generated CAD Models

At SnapMagic, our mission is to help engineers design electronics faster. Today, we provide professional-grade CAD models made in collaboration with suppliers, because finding reliable models has always been a major point of friction.

But our long-term vision has always been bigger – we want to help engineers move from idea to completed PCB as quickly as possible.

As we expand our AI-assisted design tool, our team tracks the latest models, evaluates their strengths and weaknesses for generating CAD models, and studies which prompting techniques get the best results from them.

Models tested:

- Gemini 3 Deep Think

- Claude Opus 4.6

Our evaluation focused on three core areas:

- Pinout extraction & symbol generation

- Mechanical dimension extraction

- Custom footprint shape reproduction

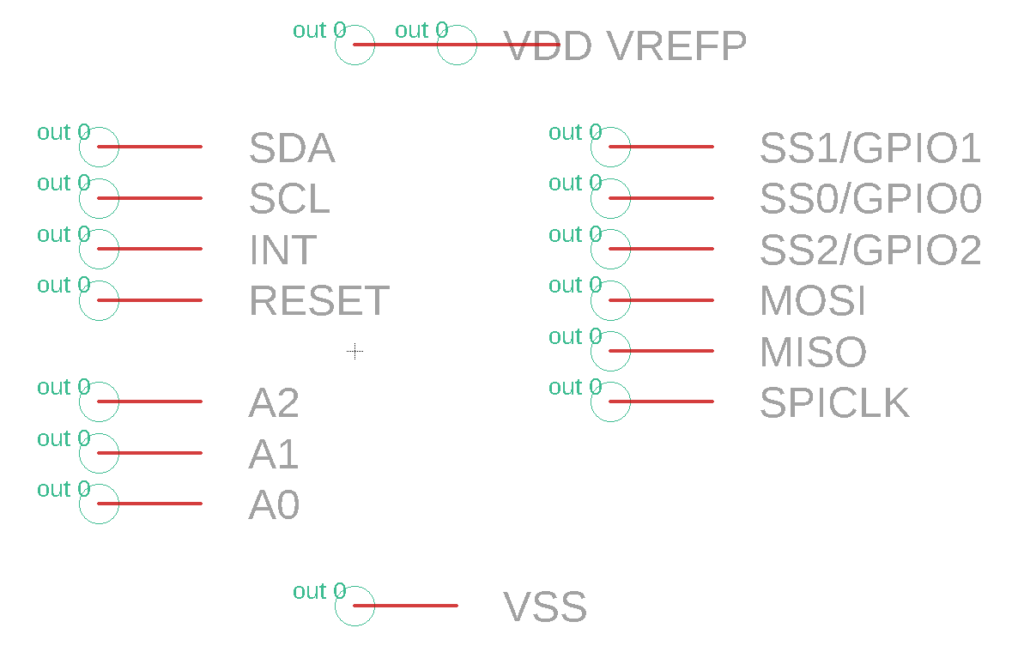

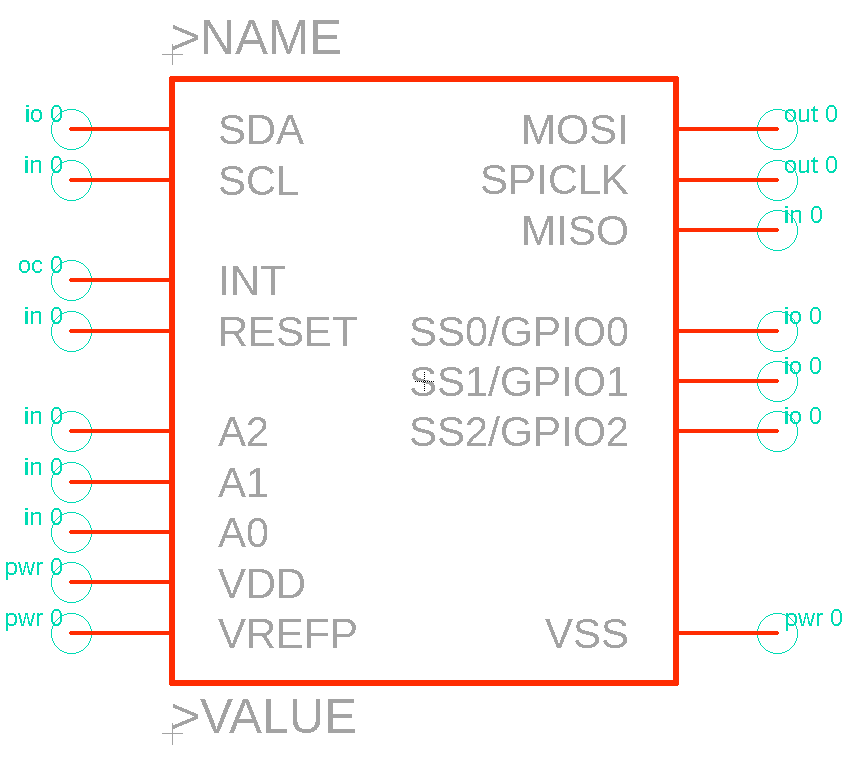

Pinout Extraction & Symbol Generation

| Area of Concern | Gemini 3 Deep Think | Claude Opus 4.6 |
|---|---|---|
| Pin name extraction | Correct | Correct |
| Electrical type assignment | Incorrectly defined | Properly defined |
| Symbol body structure | Missing | Present |
| Overall symbol quality | Rough, unstructured | Usable |

Reference part for testing: SC18IS606PWJ

Gemini 3 Deep Think produced a symbol that lacks structure: the symbol body is missing, and the pins' electrical types are not properly defined.

Claude Opus 4.6, by contrast, showed a stronger grasp of pin functionality and assigned more sensible electrical types, producing a usable schematic symbol.

At this stage, AI still needs oversight: producing a symbol that's truly design-ready requires a hybrid approach that pairs rule-based checks with AI, plus manual human review.
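The rule side of such a hybrid could start as simply as cross-checking the model's electrical-type assignments against pin-name conventions. The sketch below uses hypothetical rules, type labels, and a hypothetical `check_pin_types` helper; it is not SnapMagic's rule set, and the example pins are invented rather than taken from the SC18IS606PWJ datasheet.

```python
import re

# Hypothetical rules: a pin-name pattern and the electrical type a reviewer
# would expect for it. A real rule set would be far more extensive.
RULES = [
    (re.compile(r"^(VDD|VCC|VSS|GND)\w*$", re.IGNORECASE), "power"),
    (re.compile(r"^(NC|DNC)$", re.IGNORECASE), "no_connect"),
]


def check_pin_types(pins):
    """Return (pin_name, assigned, expected) for every rule violation.

    `pins` maps pin names to the electrical type the model assigned.
    Pins that match no rule are not flagged; they are left for human review.
    """
    issues = []
    for name, assigned in pins.items():
        for pattern, expected in RULES:
            if pattern.match(name):
                if assigned != expected:
                    issues.append((name, assigned, expected))
                break  # first matching rule decides
    return issues


# Invented AI output for illustration.
ai_pins = {"VDD": "power", "VSS": "passive", "SDA": "bidirectional", "NC": "input"}
for name, got, want in check_pin_types(ai_pins):
    print(f"{name}: model said {got!r}, rule expects {want!r}")
```

Pins that match no rule are deliberately passed to the human reviewer rather than auto-corrected, which keeps the rule layer conservative.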

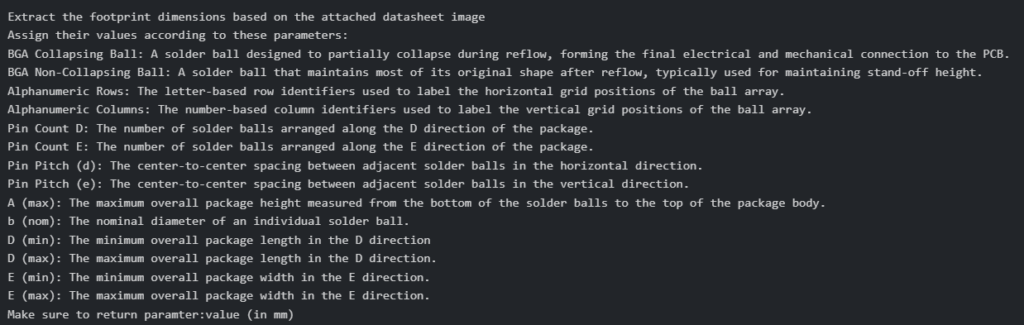

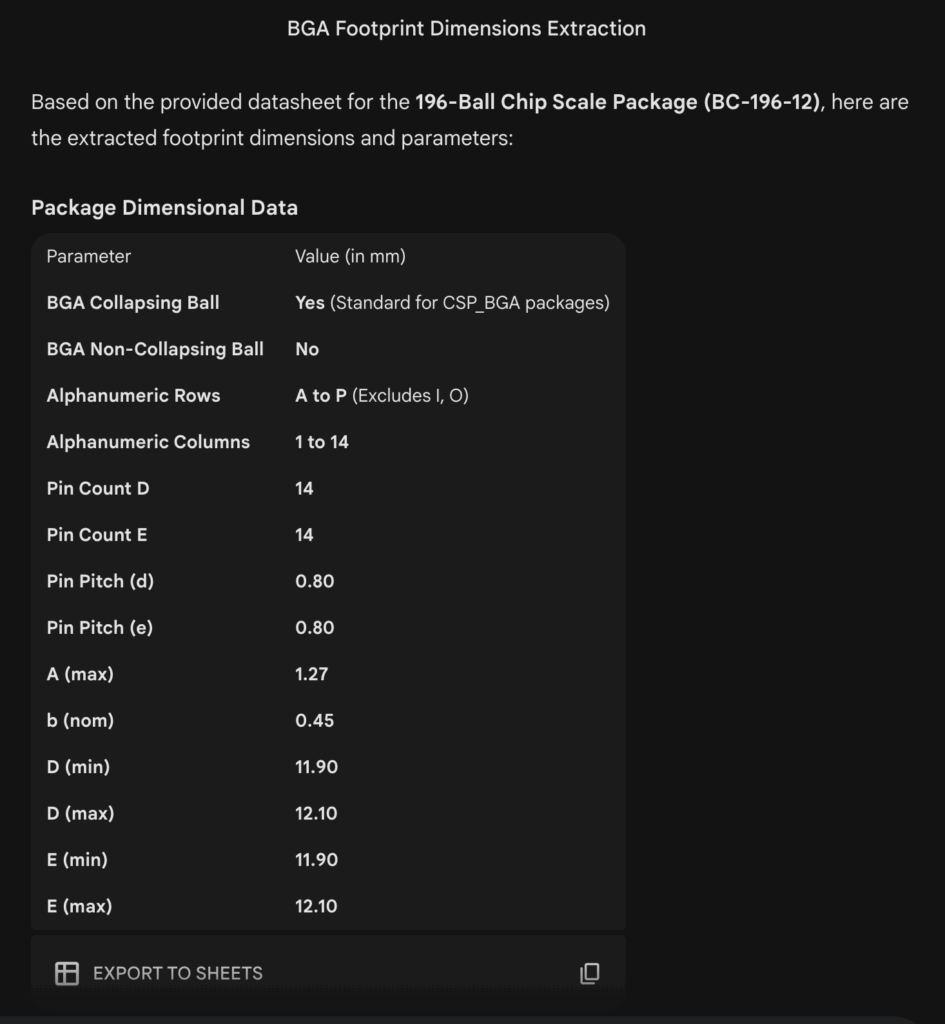

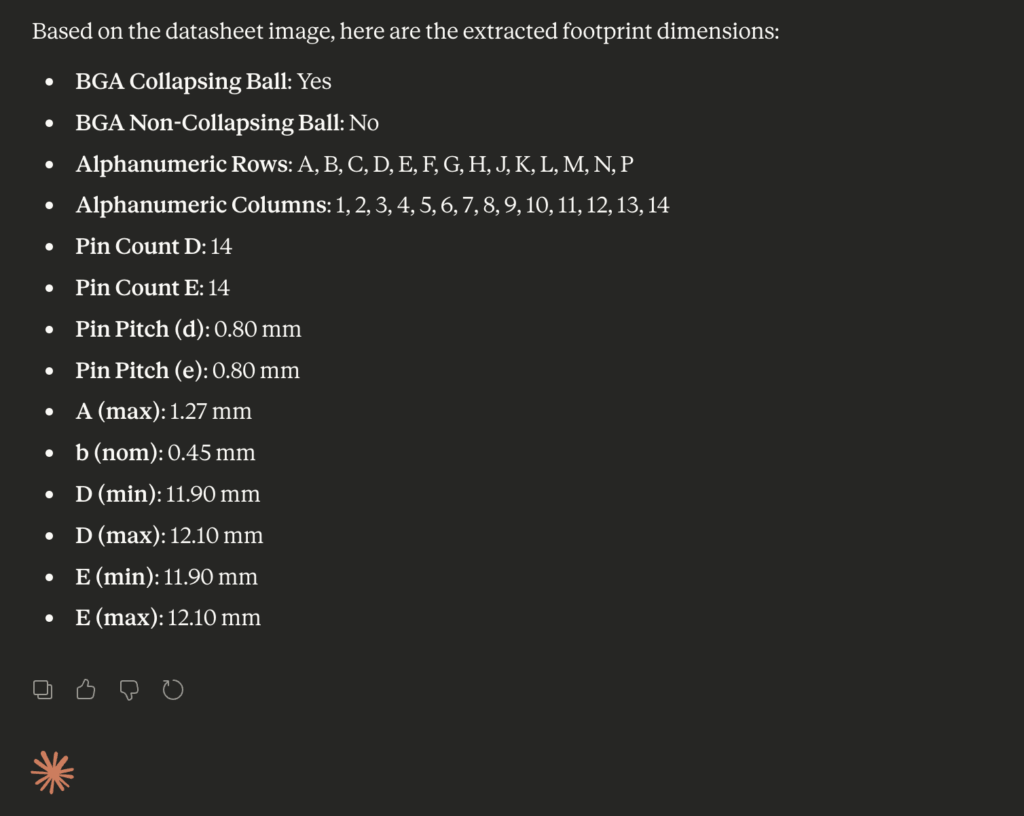

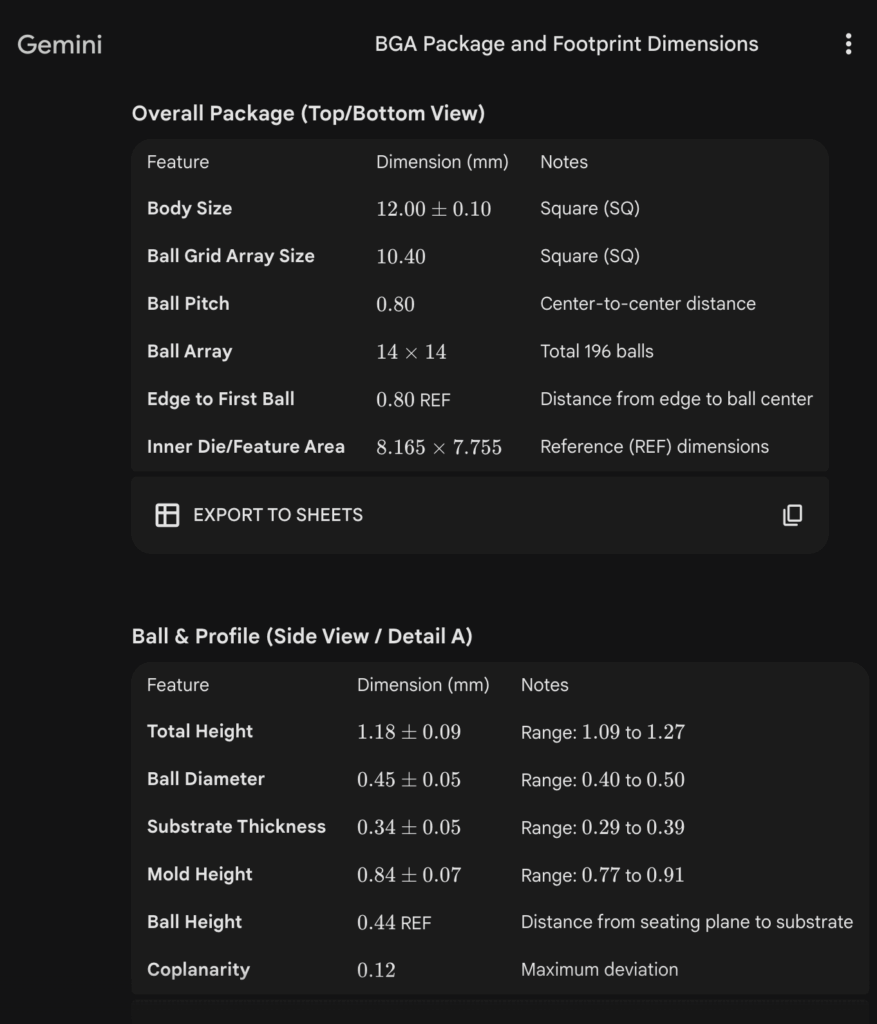

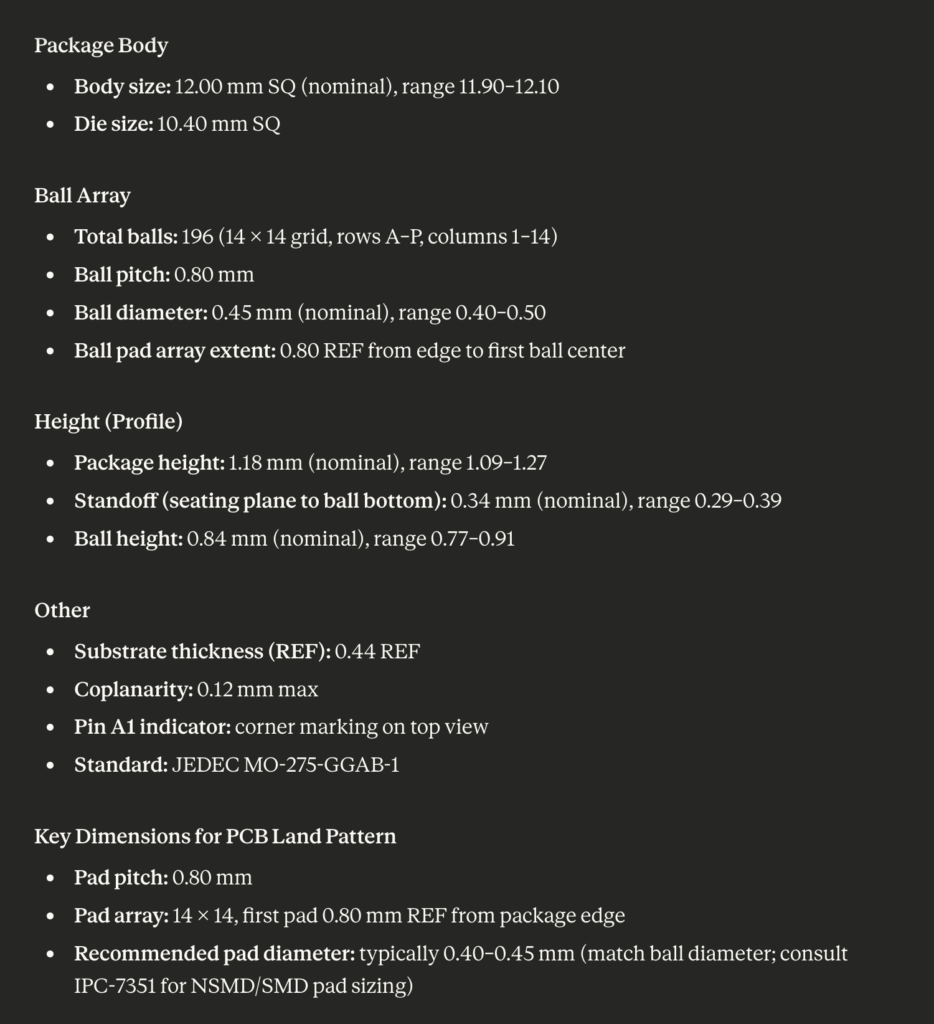

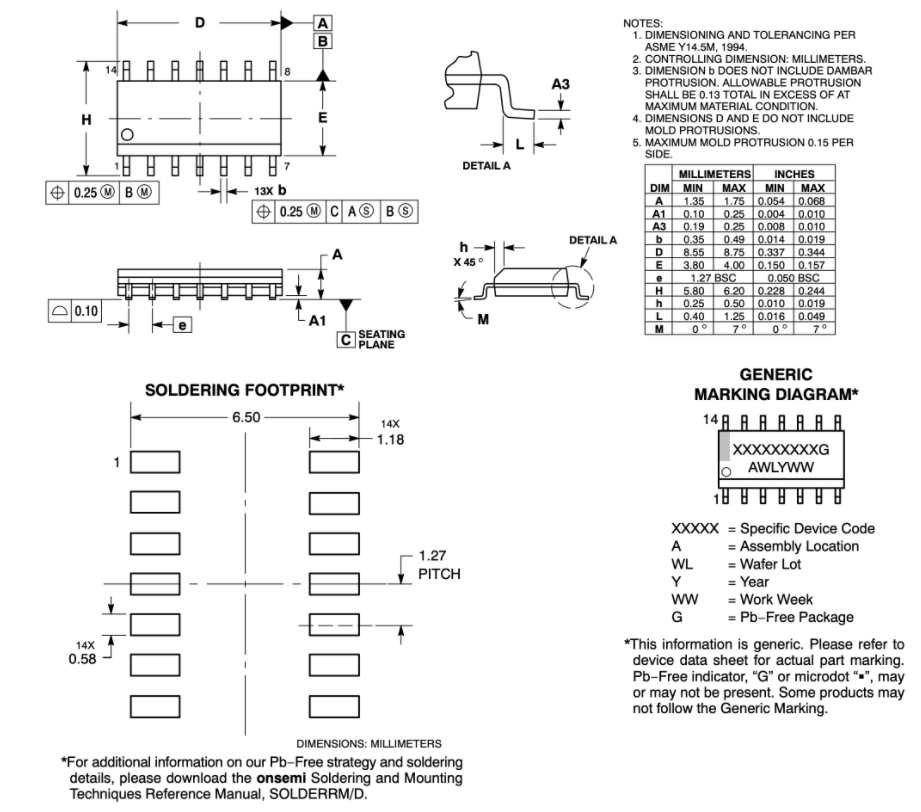

Mechanical Dimension Extraction

We tested both models on their ability to extract dimensions from mechanical drawings, using both a detailed text prompt and an image-based input.

- Standard packages tested: BGA, QFN, QFP, SOIC, SON, SOP

- Total dimensions to extract: 92 fields

- Detailed text prompt:

- Image-based input:

| Metric | Gemini 3 Deep Think | Claude Opus 4.6 |
|---|---|---|
| Overall accuracy | 84% | 92% |
| Correct dimensions extracted | 77 / 92 | 85 / 92 |
| Complete datasets extracted | 3 / 6 packages | 4 / 6 packages |
| Missing fields | Yes | No |
| Assumed tolerances | Yes | No |
| Incorrect values | 1 field | 7 fields |
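Overall accuracy in the table is the share of the 92 fields each model extracted correctly. The short snippet below reproduces that arithmetic from the reported counts.

```python
TOTAL_FIELDS = 92  # dimension fields across the six test packages


def accuracy(correct: int, total: int) -> float:
    """Share of fields extracted correctly, as a fraction between 0 and 1."""
    return correct / total


for model, n_correct in {"Gemini 3 Deep Think": 77, "Claude Opus 4.6": 85}.items():
    acc = accuracy(n_correct, TOTAL_FIELDS)
    not_correct = TOTAL_FIELDS - n_correct
    print(f"{model}: {n_correct}/{TOTAL_FIELDS} = {acc:.1%}, {not_correct} fields not correct")
```

The exact values (83.7% and 92.4%) round to the reported 84% and 92%. For Claude Opus 4.6, the 7 fields that were not correct line up with the 7 incorrect values in the table.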

Providing a detailed prompt or an image input produced similar results in our testing.

Gemini 3 Deep Think generally extracts dimensions correctly, but not consistently. It sometimes leaves required fields empty or assumes tolerances that the drawing does not specify.

Claude Opus 4.6 performed better overall: it had no missing fields or assumed tolerances, although some fields contained incorrectly extracted values. Gemini, by contrast, had fewer outright incorrect values but struggled more with missing data and assumed values.
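The three failure modes above (incorrect values, missing fields, and assumed tolerances) can be told apart automatically when a hand-verified reference exists. Below is a minimal sketch of scoring extracted dimensions that way; the `Dim` record, the category names, and the convention that a `None` tolerance means "not specified in the drawing" are assumptions for illustration, and the dimension values are invented.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Optional


@dataclass
class Dim:
    nominal: Optional[float]    # mm; None means the field was left empty
    tolerance: Optional[float]  # +/- mm; None means no tolerance given


def classify(reference: Dim, extracted: Dim, eps: float = 1e-6) -> str:
    """Score one extracted dimension against the verified reference.

    Assumes the reference always has a nominal value.
    """
    if extracted.nominal is None:
        return "missing"
    if abs(extracted.nominal - reference.nominal) > eps:
        return "incorrect"
    if reference.tolerance is None:
        # The drawing gives no tolerance, so any tolerance here was invented.
        return "correct" if extracted.tolerance is None else "assumed_tolerance"
    if extracted.tolerance is None:
        return "missing"
    if abs(extracted.tolerance - reference.tolerance) > eps:
        return "incorrect"
    return "correct"


# Invented package data: field name -> dimension.
reference = {"D": Dim(4.40, 0.10), "E": Dim(6.40, None), "e": Dim(0.65, None)}
extracted = {"D": Dim(4.40, 0.10), "E": Dim(6.40, 0.20)}  # "e" was skipped
tally = Counter(classify(reference[k], extracted.get(k, Dim(None, None))) for k in reference)
print(tally)
```

Keeping "assumed_tolerance" separate from "incorrect" matters here because, as the results above show, the two models fail in different ways.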

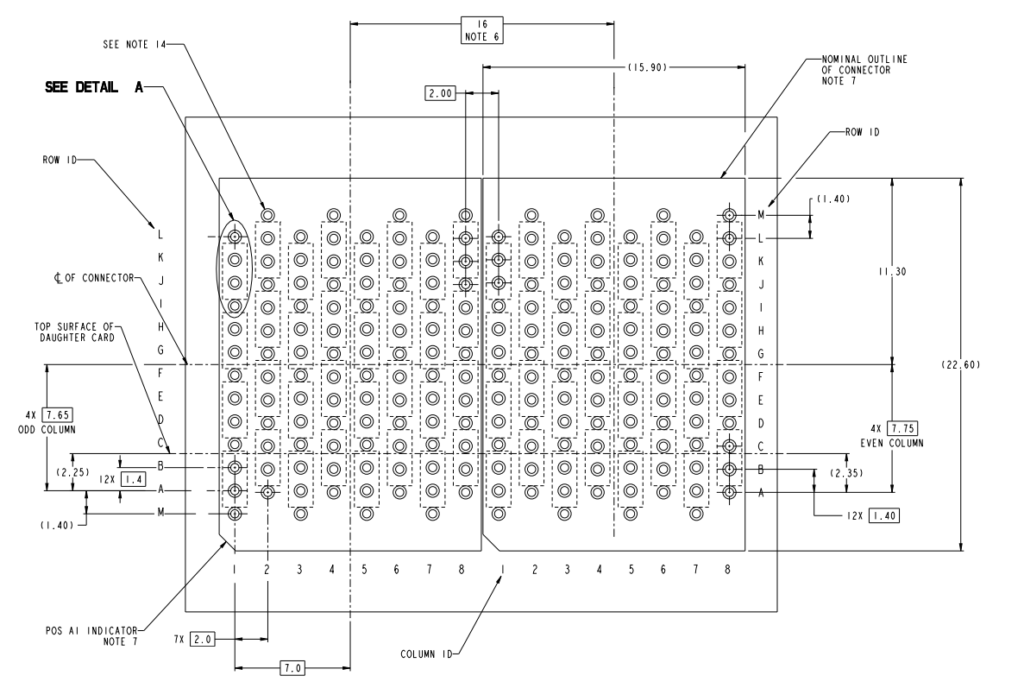

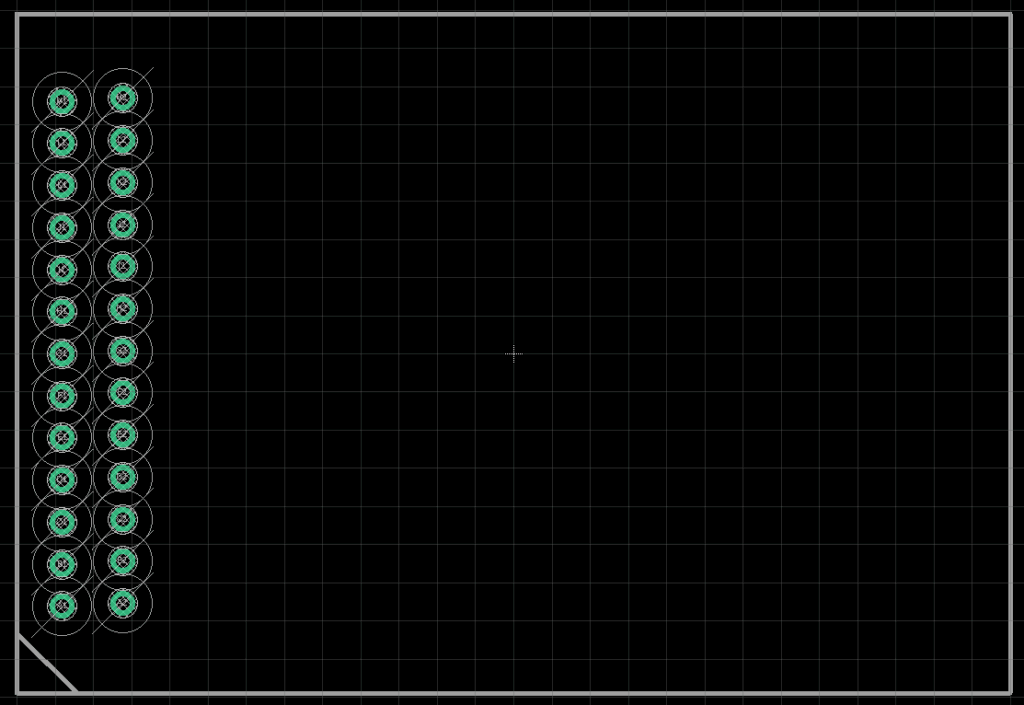

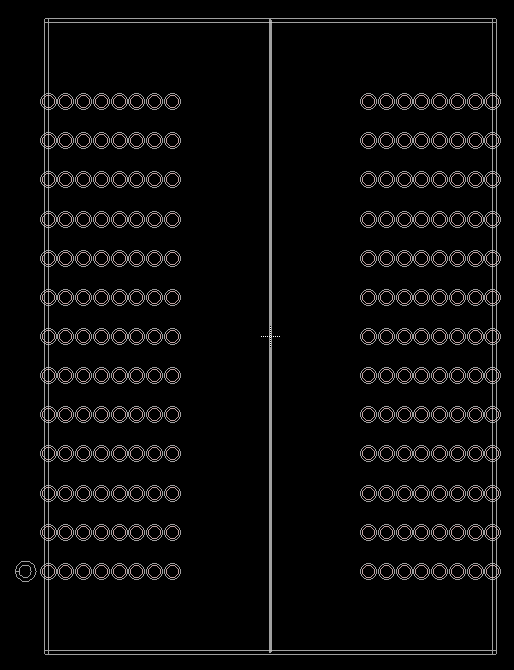

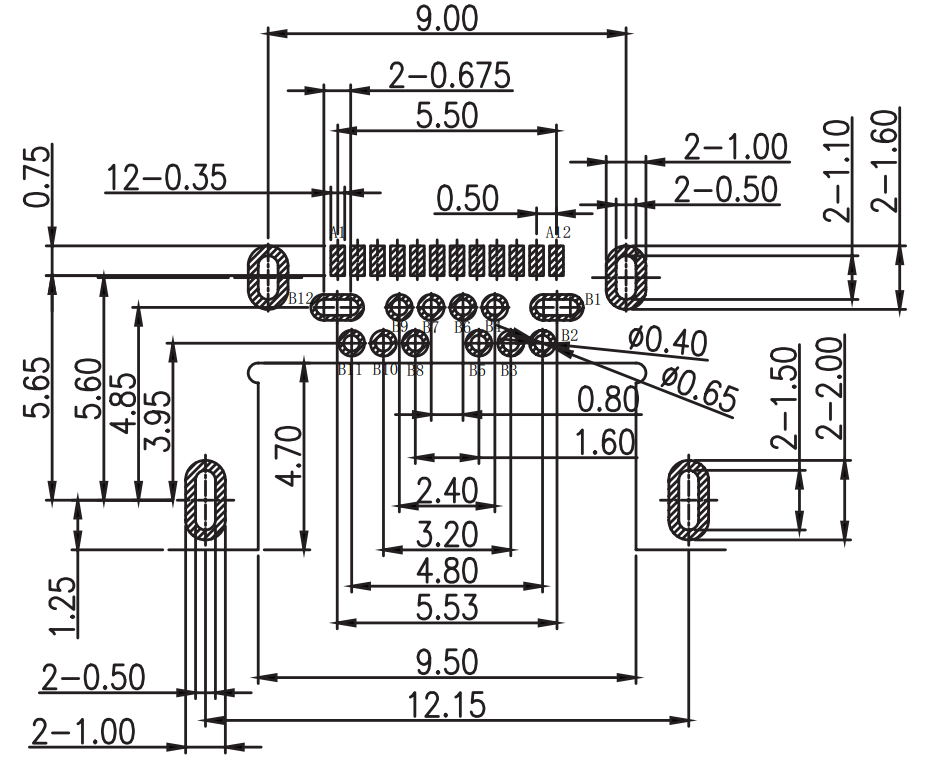

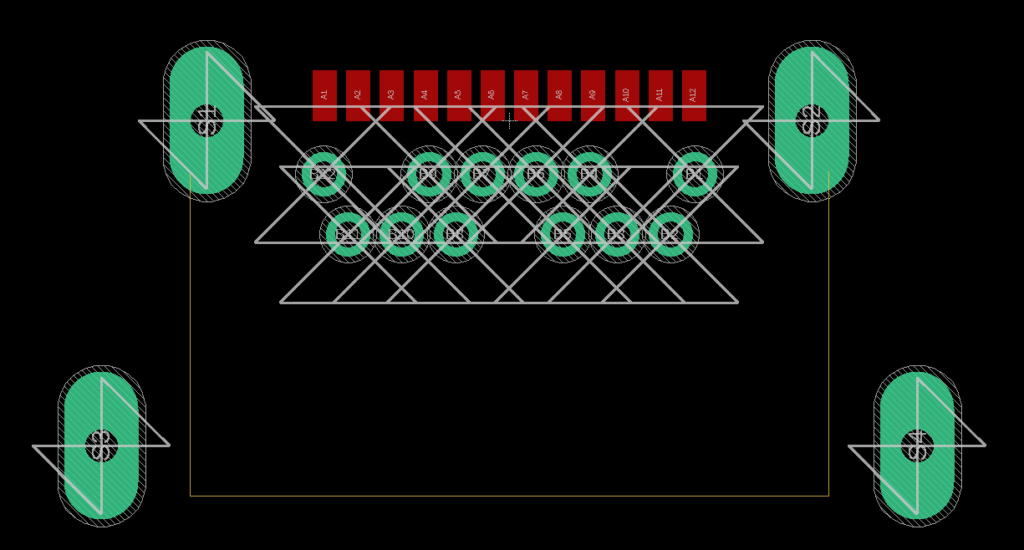

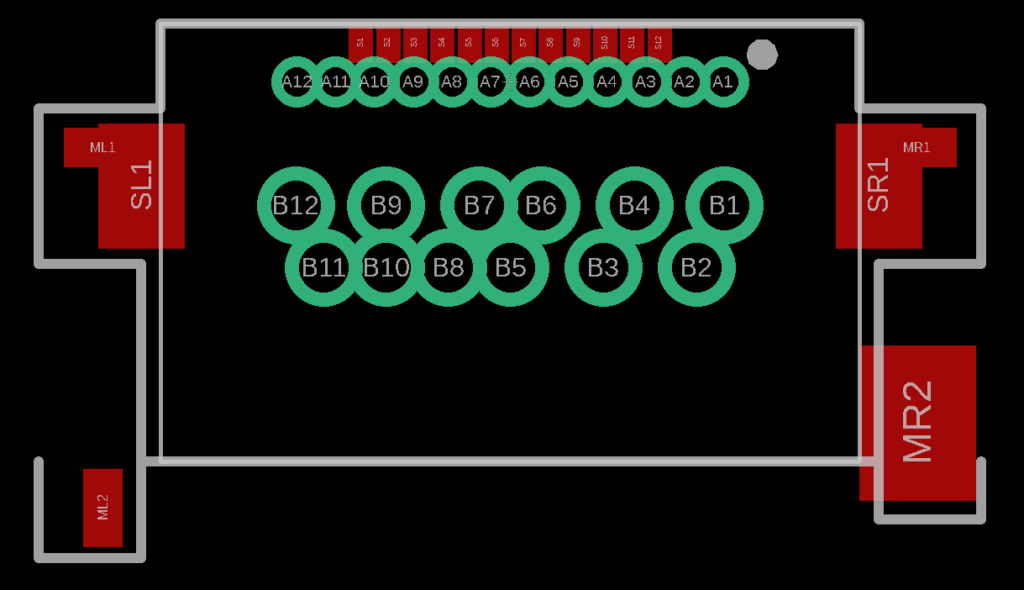

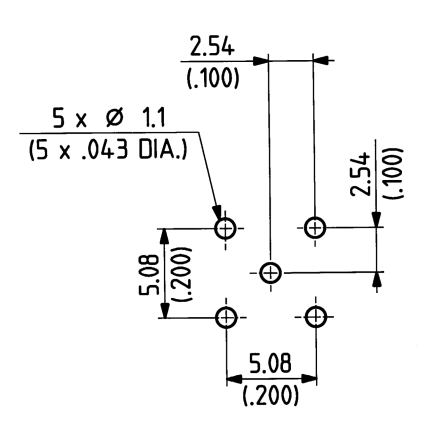

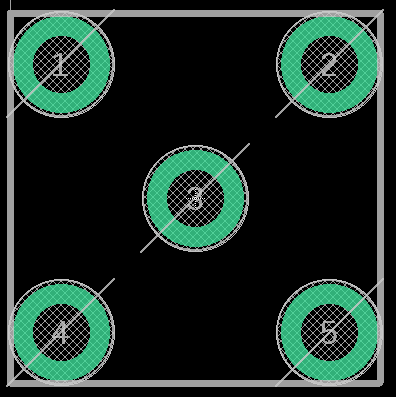

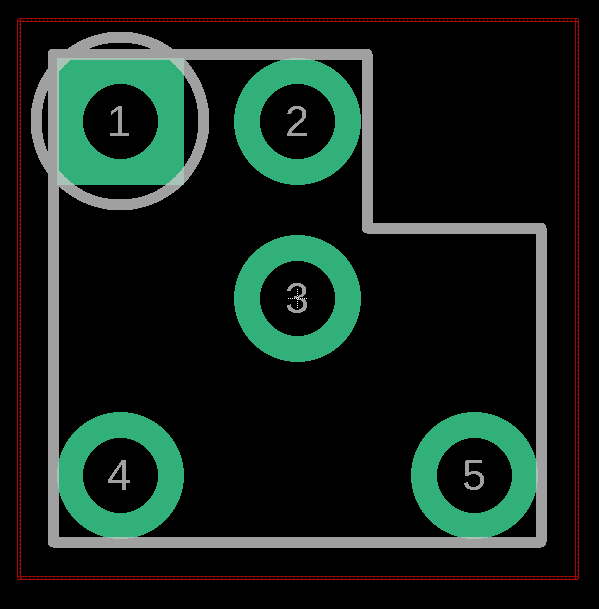

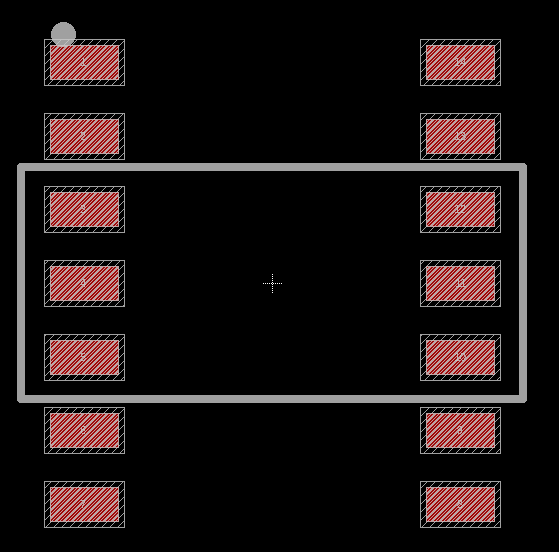

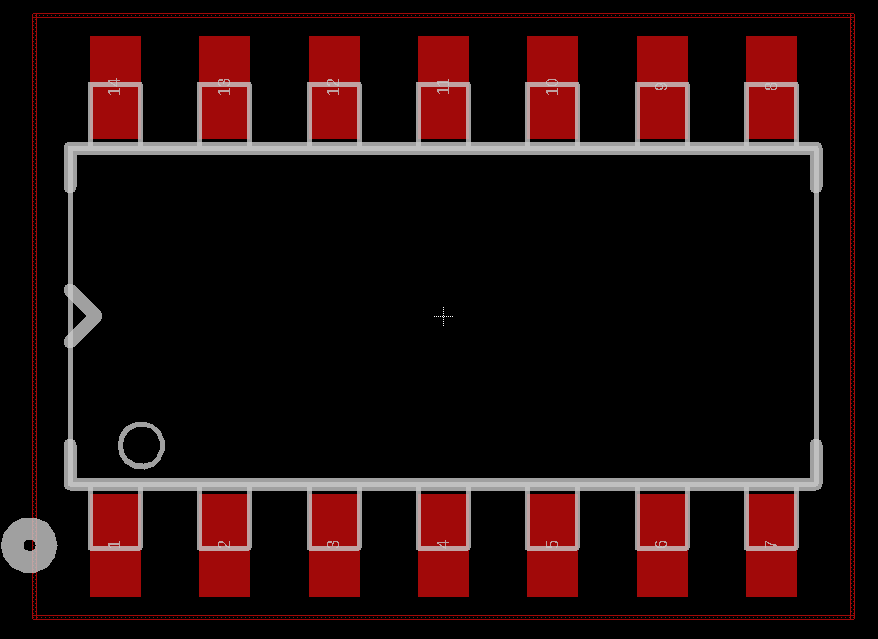

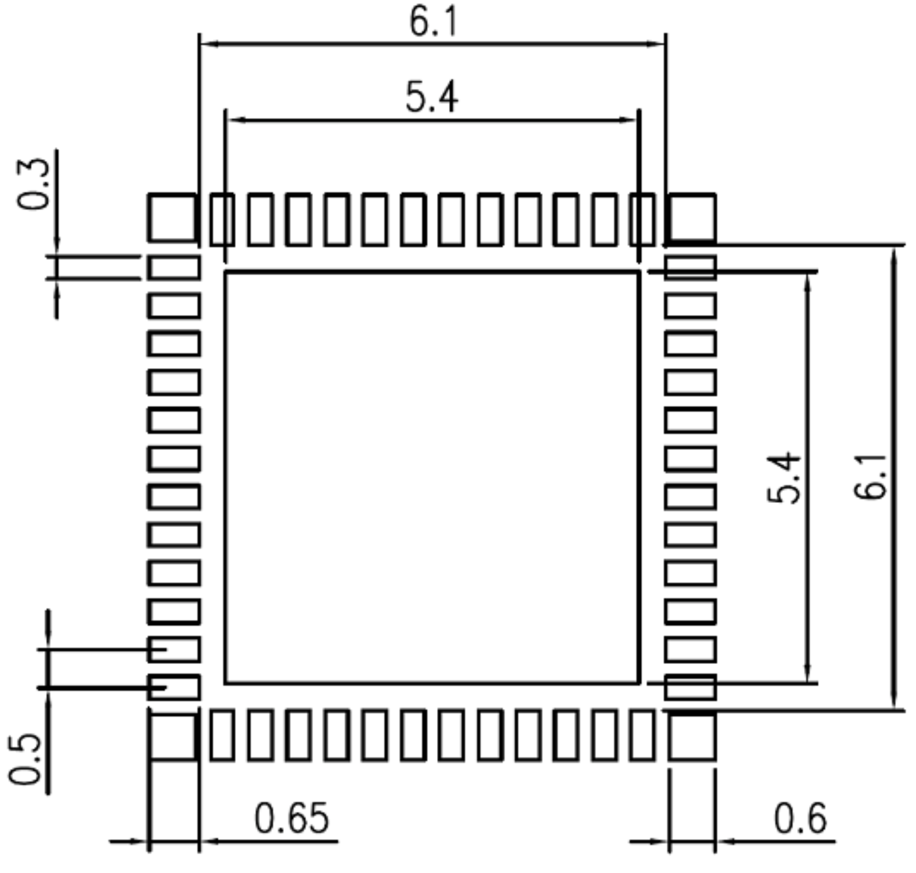

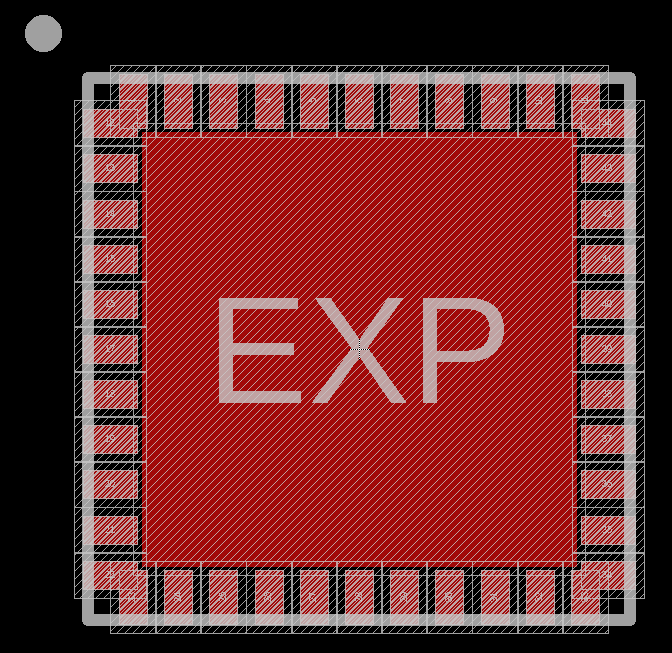

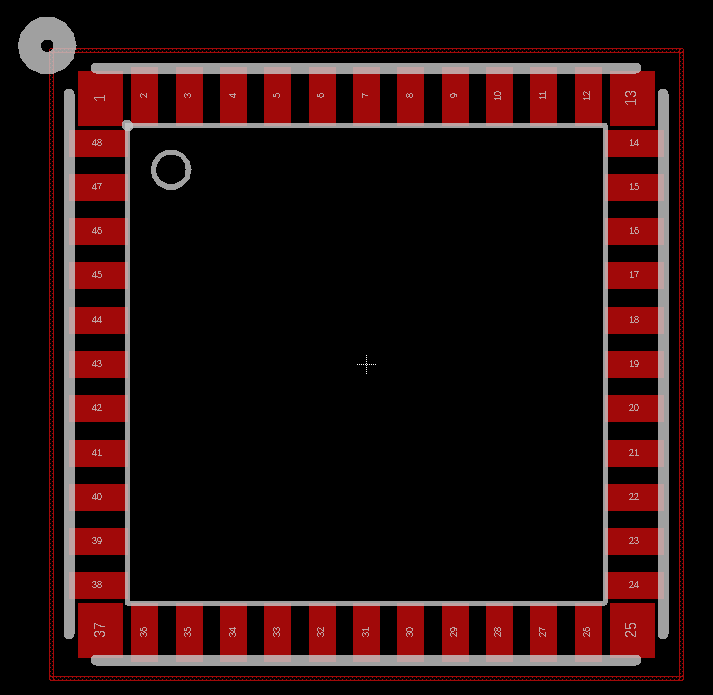

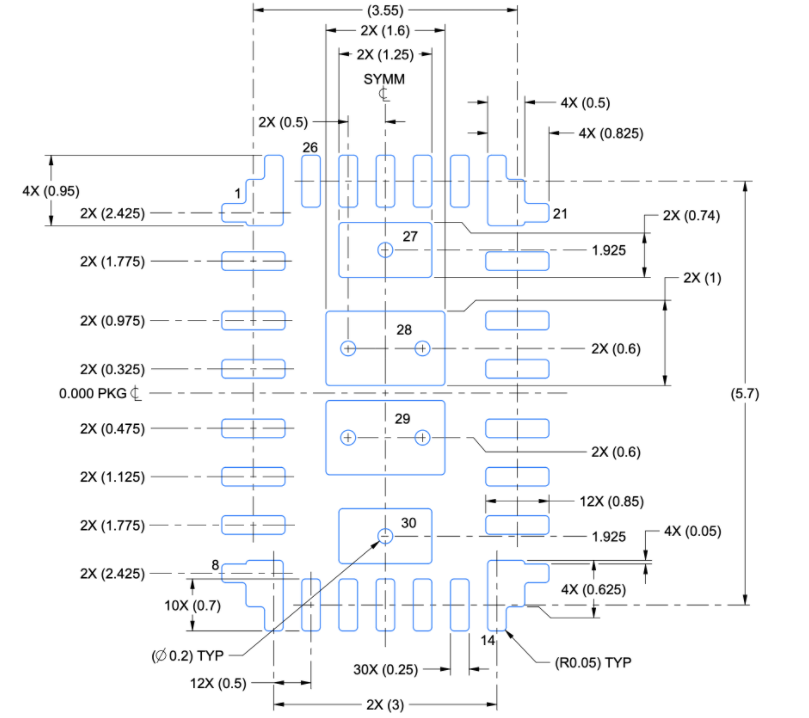

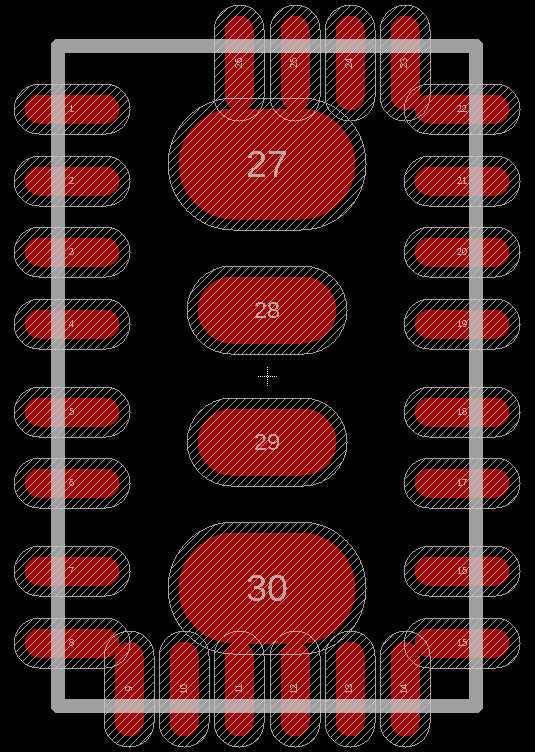

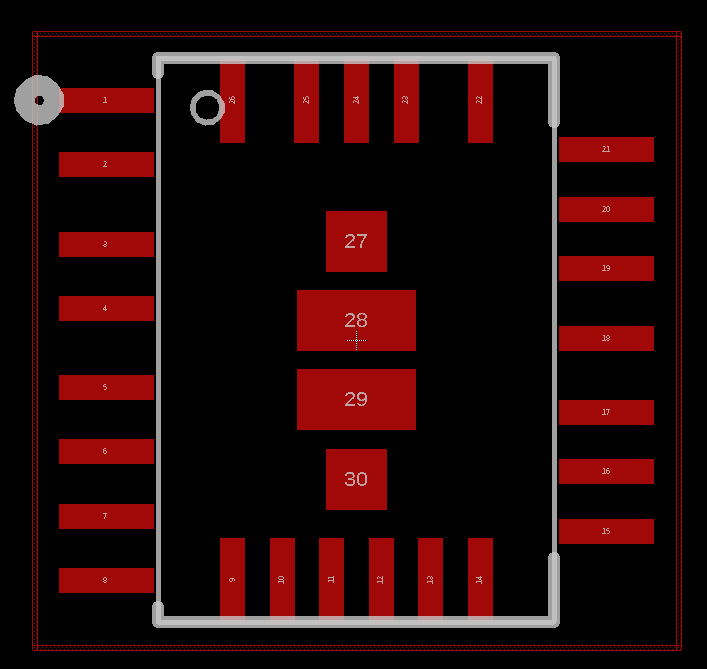

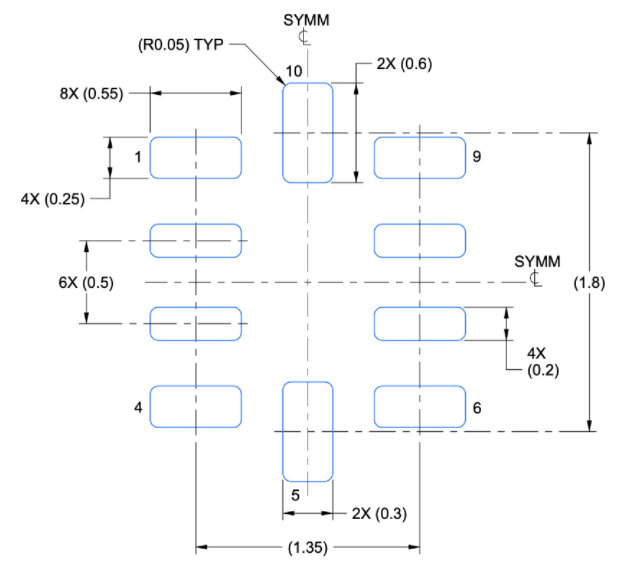

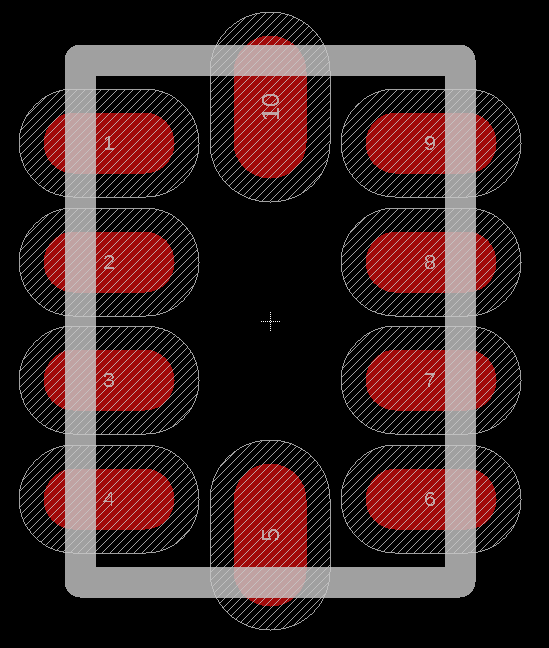

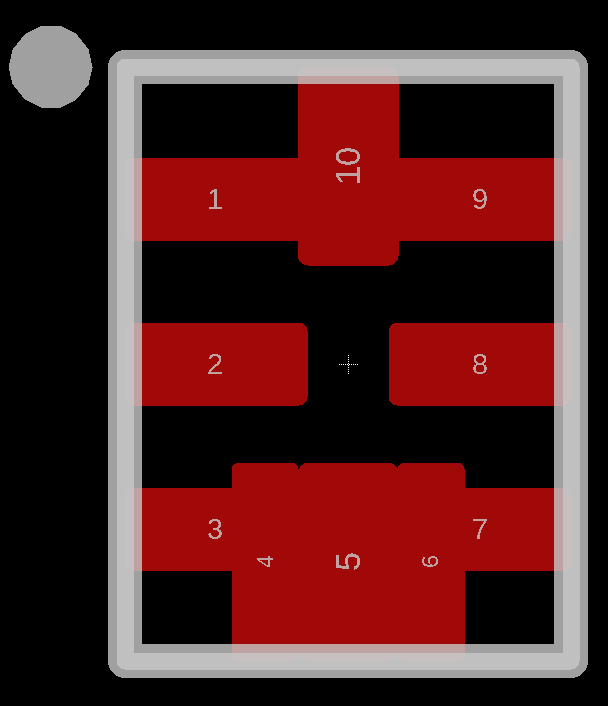

Custom Footprint Shape Reproduction

We tested simple, moderate, and complex footprint designs to assess both geometric accuracy and dimensional scaling precision.

- Simple design

- Moderate design

- Complex design

| Design Elements | Gemini 3 Deep Think | Claude Opus 4.6 |
|---|---|---|
| Simple and symmetrical | ❌ | ❌ |
| Moderate complexity | ❌ | ❌ |
| Complex patterns | ❌ | ❌ |
| Multiple pad dimensions | ❌ | ❌ |
| Slots and cutouts | ❌ | ❌ |
| Restricted areas | ❌ | ❌ |

Both models can handle simple, symmetrical patterns, but the results are inconsistent (sometimes good, sometimes bad) and depend on how the pattern is drawn in the datasheet. Accuracy drops when a pattern contains three or more pad-size variations, and values are often interchanged. The models also struggle to form slots, cutouts, and restricted areas correctly. Custom or irregular shapes are not reliably translated, whether the output is a drawing file (.DXF) or a CAD library format (.lbr, .IntLib, etc.).

Our tests make the biggest bottleneck in automating footprint generation clear: accurately capturing the exact shape of a pattern at the correct scale remains unresolved, even in the latest AI models.
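Errors like interchanged pad sizes are easy to miss by eye. As a rough sketch of an automated check (an illustration, not SnapMagic's tooling), the snippet below compares a generated footprint against a hand-verified reference pad by pad. The `Pad` record, the 0.01 mm tolerance, and the finding labels are assumptions, the pattern is invented, and the check covers only rectangular pads, not slots, cutouts, or restricted areas.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pad:
    x: float  # pad center, mm
    y: float
    w: float  # pad width, mm
    h: float  # pad height, mm


def distinct_sizes(pads: dict[str, Pad], ndigits: int = 3) -> set[tuple[float, float]]:
    """Distinct (width, height) pairs, i.e. the pad-size variations in a pattern."""
    return {(round(p.w, ndigits), round(p.h, ndigits)) for p in pads.values()}


def compare_pads(reference: dict[str, Pad], generated: dict[str, Pad],
                 tol: float = 0.01) -> dict[str, str]:
    """Map each pad number that deviates from the reference to a short finding."""

    def close(a: float, b: float) -> bool:
        return abs(a - b) <= tol

    ref_sizes = distinct_sizes(reference)
    findings: dict[str, str] = {}
    for num, ref in reference.items():
        gen = generated.get(num)
        if gen is None:
            findings[num] = "missing"
        elif not (close(gen.x, ref.x) and close(gen.y, ref.y)):
            findings[num] = "misplaced"  # scaling errors usually surface here
        elif close(gen.w, ref.w) and close(gen.h, ref.h):
            continue  # pad matches the reference
        elif any(close(gen.w, w) and close(gen.h, h) for w, h in ref_sizes):
            findings[num] = "size of a different pad group"
        elif close(gen.w, ref.h) and close(gen.h, ref.w):
            findings[num] = "width/height swapped"
        else:
            findings[num] = "wrong size"
    return findings


# Invented three-size pattern; the generated copy has typical faults.
reference = {
    "1": Pad(0, 0, 1.0, 0.6),
    "2": Pad(2, 0, 1.0, 0.6),
    "3": Pad(1, 2, 2.0, 2.0),
    "4": Pad(1, -2, 0.8, 0.3),
}
generated = {
    "1": Pad(0, 0, 1.0, 0.6),
    "2": Pad(2, 0, 0.6, 1.0),
    "3": Pad(1, 2, 0.8, 0.3),
}
print(len(distinct_sizes(reference)), "pad sizes")
print(compare_pads(reference, generated))
```

Checking for "size of a different pad group" before the generic "wrong size" targets the interchanged-value failure described above, which becomes more likely as the number of distinct pad sizes grows.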

Conclusion

| Category | Gemini 3 Deep Think | Claude Opus 4.6 |
|---|---|---|
| Pinout handling | Basic | Stronger |
| Dimension extraction | Good but inconsistent | More accurate overall |
| Shape accuracy | Weak for complex designs | Weak for complex designs |
| Production-ready without human review | No | No |

Both Gemini 3 Deep Think and Claude Opus 4.6 show promising results in extracting dimensions, but producing exact shapes and a fully functional CAD library is still out of reach.

Technology moves fast, and AI is evolving rapidly. At SnapMagic, we adapt our workflows alongside these innovations, but accuracy and quality remain our top priorities. Engineers need CAD models they can trust 100%, and for now, that level of certainty is something AI alone cannot guarantee.

P.S. We’re looking for engineers who enjoy pushing LLMs into the real world, all the way down to PCB design. If you love working across AI and hardware, contact us at [email protected].